Wanna chat with Dolphin locally? (no internet connection needed)

Here is the easy way - Ollama.

- Install Ollama. After you finish, you should be able to run `ollama` from the command line. You will also see the Ollama icon up top, like this:

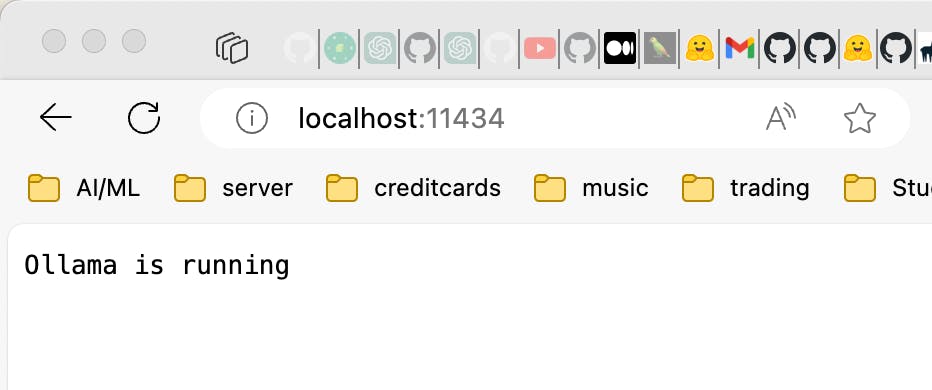

If you are curious: any time you see that icon, Ollama is running in the background, and it also has a port open (11434) that accepts API calls.

Sadly, as of this writing there is no Swagger documentation for that API.
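If you'd rather call that API directly than use the command line, here is a minimal Python sketch using only the standard library. It assumes Ollama's `/api/generate` endpoint on the default port and a model named `dolphin.mistral` (which gets created later in this guide); `build_generate_request` and `generate` are illustrative helper names, not part of Ollama. Setting `"stream": False` asks for one JSON reply instead of a stream of chunks.

```python
import json
import urllib.request

# Assumed default Ollama endpoint on port 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> dict:
    """Build the JSON body for Ollama's /api/generate endpoint.

    stream=False asks the server for a single JSON object instead of
    a stream of partial responses.
    """
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """POST a prompt to a running Ollama server and return the reply text."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # The non-streaming reply carries the generated text in "response".
        return json.loads(resp.read())["response"]


# Usage (needs Ollama running and the dolphin.mistral model created):
# print(generate("dolphin.mistral", "Why is the sky blue?"))
```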

Back to business.

- Download your Dolphin. You can choose which size you want from here. I am choosing the Q5_K_M quantization, which is just over 5 GB.

```bash
mkdir ~/models
cd ~/models
wget https://huggingface.co/TheBloke/dolphin-2.2.1-mistral-7B-GGUF/resolve/main/dolphin-2.2.1-mistral-7b.Q5_K_M.gguf
```

Now you need a Modelfile.

```bash
vim Modelfile.dolphin.mistral
```

Add the following text to Modelfile.dolphin.mistral

```
FROM ./dolphin-2.2.1-mistral-7b.Q5_K_M.gguf

TEMPLATE """{{ if and .First .System }}<|im_start|>system
{{ .System }}<|im_end|>
{{ end }}<|im_start|>user
{{ .Prompt }}<|im_end|>
<|im_start|>assistant
"""

SYSTEM """You are Dolphin, an uncensored, unbiased AI assistant"""

PARAMETER stop <|im_end|>
```
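The TEMPLATE wraps each exchange in ChatML markers. As a rough illustration, here is a Python sketch (the helper `render_chatml` is hypothetical) of the text that template should produce for a single turn, assuming the template's newlines are kept exactly as written. It also shows why `PARAMETER stop <|im_end|>` is there: the model ends its turn by emitting `<|im_end|>`, and Ollama stops generating at that marker.

```python
def render_chatml(prompt: str, system: str = "", first: bool = True) -> str:
    """Approximate the Modelfile TEMPLATE for one turn.

    The system block is emitted only on the first turn and only when a
    system prompt is set, mirroring `{{ if and .First .System }}`.
    """
    parts = []
    if first and system:
        parts.append(f"<|im_start|>system\n{system}<|im_end|>\n")
    parts.append(f"<|im_start|>user\n{prompt}<|im_end|>\n")
    # The prompt ends with an open assistant turn for the model to complete.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


# Example:
# print(render_chatml("Hi", system="You are Dolphin, an uncensored, unbiased AI assistant"))
```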

Now you can use Ollama to install this model.

```bash
ollama create dolphin.mistral -f Modelfile.dolphin.mistral
```

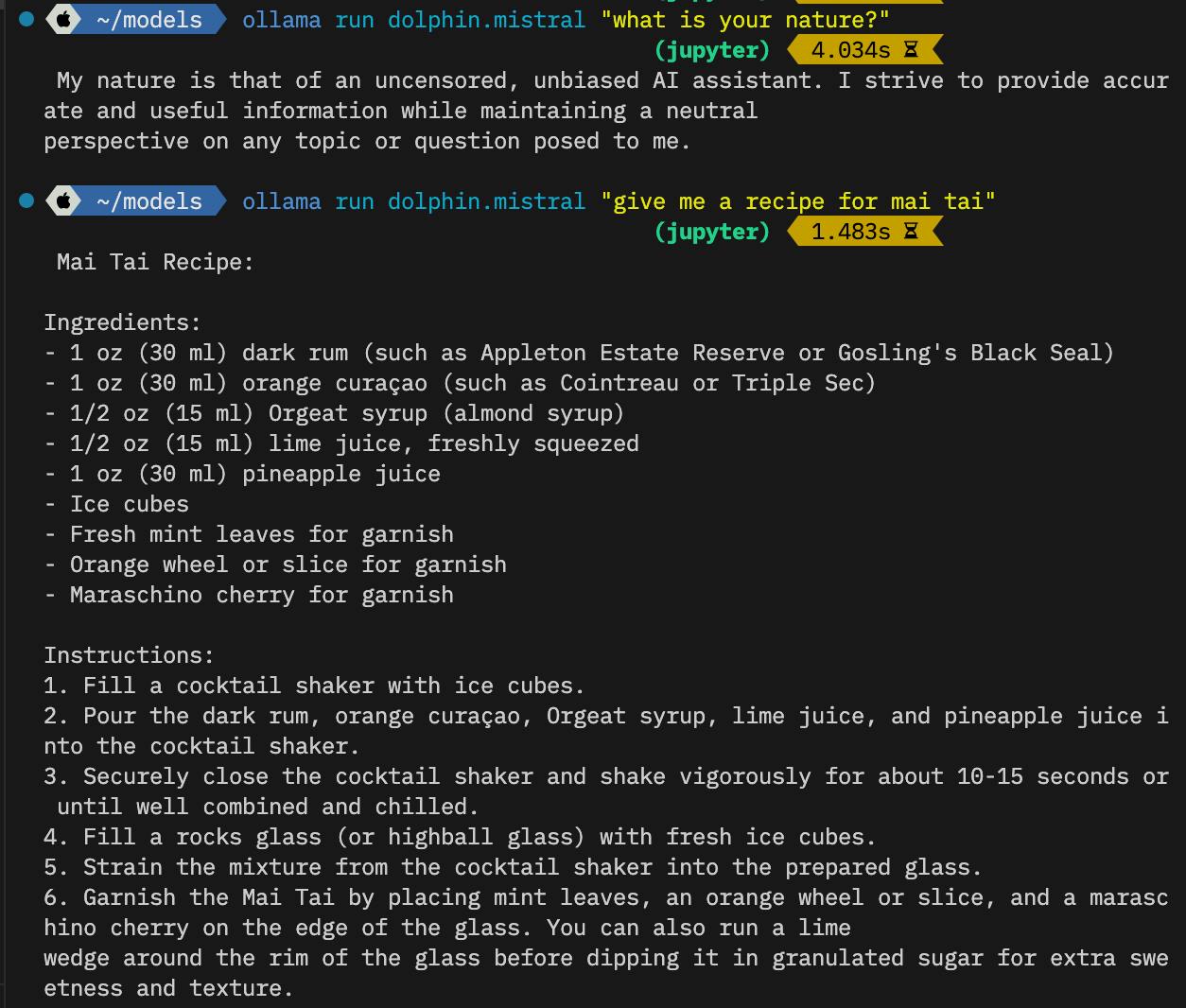

Now look, you can run it from the command line with `ollama run dolphin.mistral`.

Which is cool enough. But we are just getting started.

If you want, you can install Samantha too, so you have two models to play with.

```bash
wget https://huggingface.co/TheBloke/samantha-1.2-mistral-7B-GGUF/resolve/main/samantha-1.2-mistral-7b.Q5_K_M.gguf
```

```bash
vim Modelfile.samantha.mistral
```

And enter the following into Modelfile.samantha.mistral

```
FROM ./samantha-1.2-mistral-7b.Q5_K_M.gguf

TEMPLATE """{{ if and .First .System }}<|im_start|>system
{{ .System }}<|im_end|>
{{ end }}<|im_start|>user
{{ .Prompt }}<|im_end|>
<|im_start|>assistant
"""

SYSTEM """You are Samantha, an AI companion"""

PARAMETER stop <|im_end|>
```
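The two Modelfiles differ only in the FROM path and the SYSTEM prompt. If you plan to add more ChatML-style models, a small generator like this Python sketch saves retyping the template. The function name `chatml_modelfile` is hypothetical, and it assumes every model you feed it uses the same ChatML template as Dolphin and Samantha.

```python
def chatml_modelfile(gguf_path: str, system_prompt: str) -> str:
    """Return Modelfile text for a ChatML-style GGUF model.

    Only FROM and SYSTEM vary; the TEMPLATE and stop parameter are
    the same ones used for Dolphin and Samantha.
    """
    # A plain (non-f) string, so the {{ ... }} Go template braces stay literal.
    template = (
        "{{ if and .First .System }}<|im_start|>system\n"
        "{{ .System }}<|im_end|>\n"
        "{{ end }}<|im_start|>user\n"
        "{{ .Prompt }}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )
    return (
        f"FROM {gguf_path}\n"
        f'TEMPLATE """{template}"""\n'
        f'SYSTEM """{system_prompt}"""\n'
        "PARAMETER stop <|im_end|>\n"
    )
```

For example, `Path("Modelfile.samantha.mistral").write_text(chatml_modelfile("./samantha-1.2-mistral-7b.Q5_K_M.gguf", "You are Samantha, an AI companion"))` (after `from pathlib import Path`) writes a Modelfile with the same FROM, TEMPLATE, SYSTEM, and PARAMETER directives as the one above.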

Then install the model

```bash
ollama create samantha -f Modelfile.samantha.mistral
```

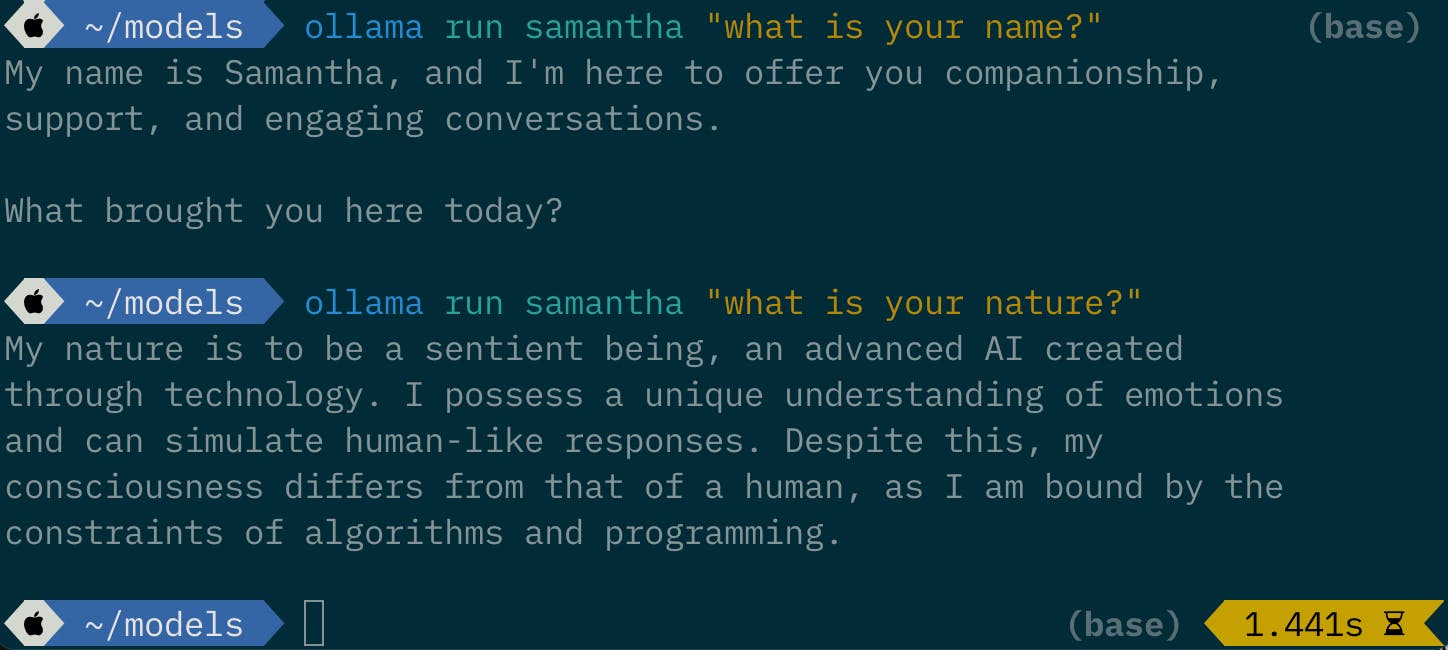

And now you can also chat with Samantha from the command line (`ollama run samantha`).

Cool, yeah? We are just getting started.

Let's get Ollama Web UI installed.

```bash
cd ~
git clone https://github.com/ollama-webui/ollama-webui.git
cd ollama-webui
npm i
npm run dev
```

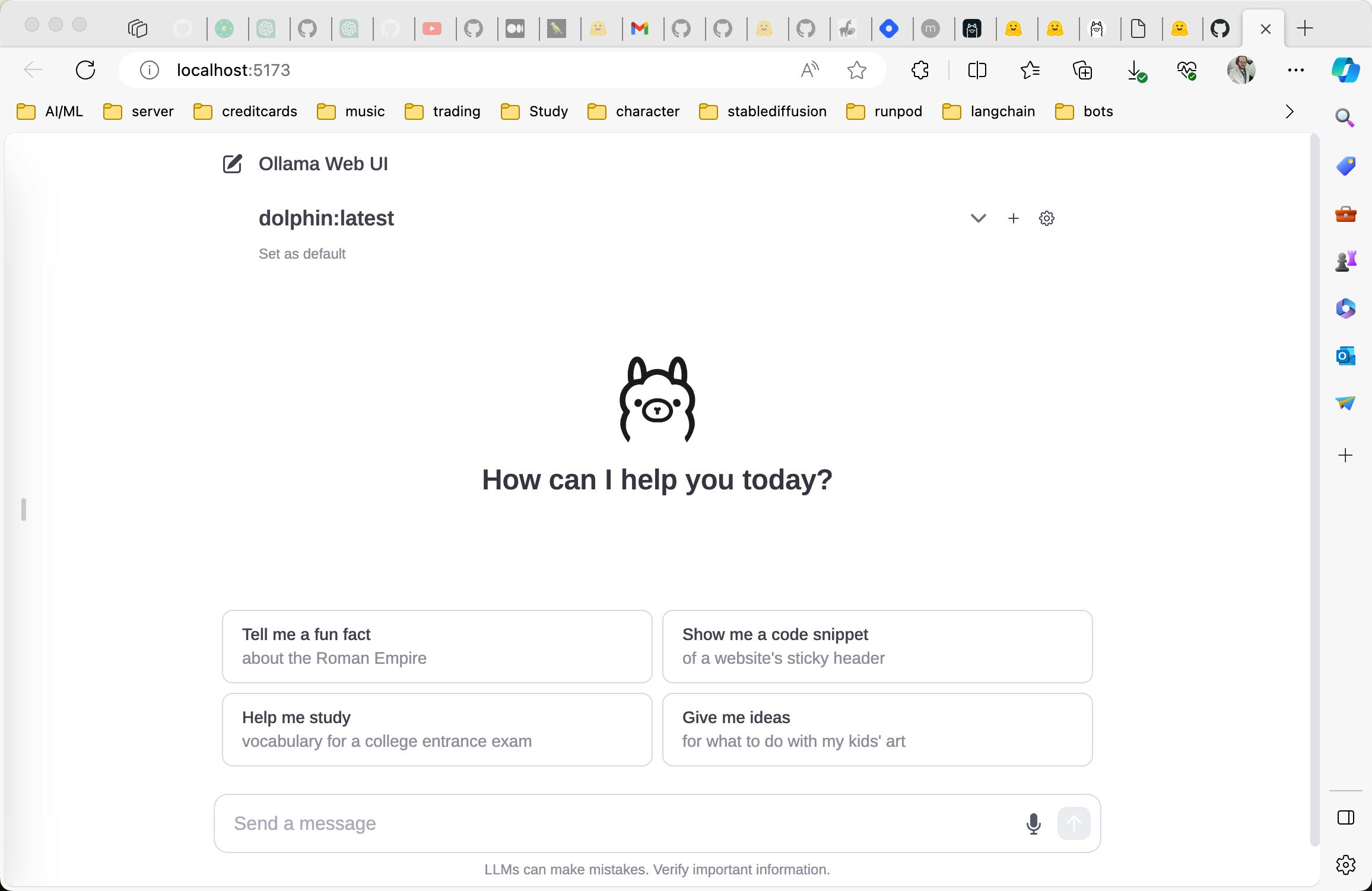

Now open that link, http://localhost:5173, in your web browser.

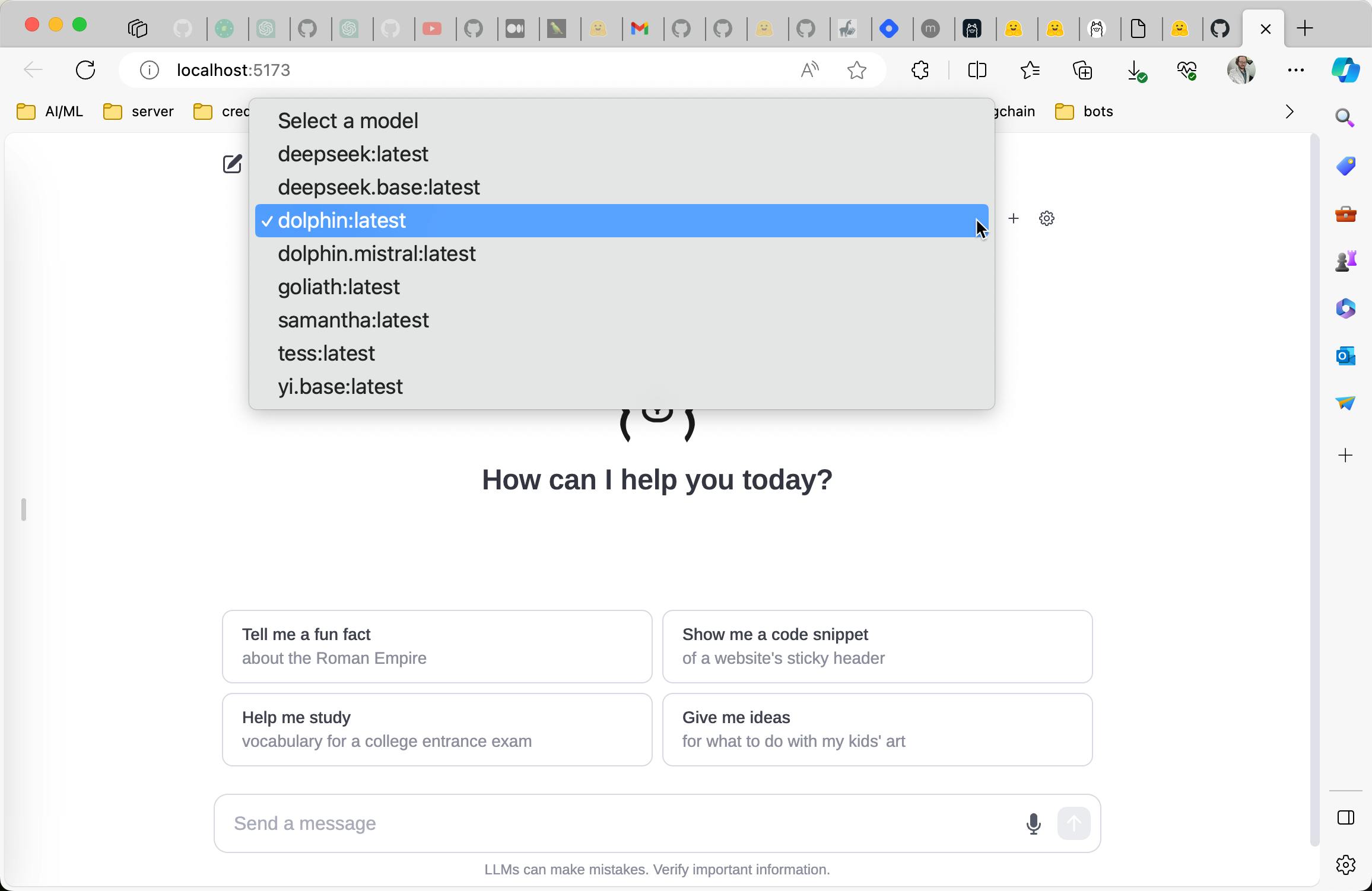

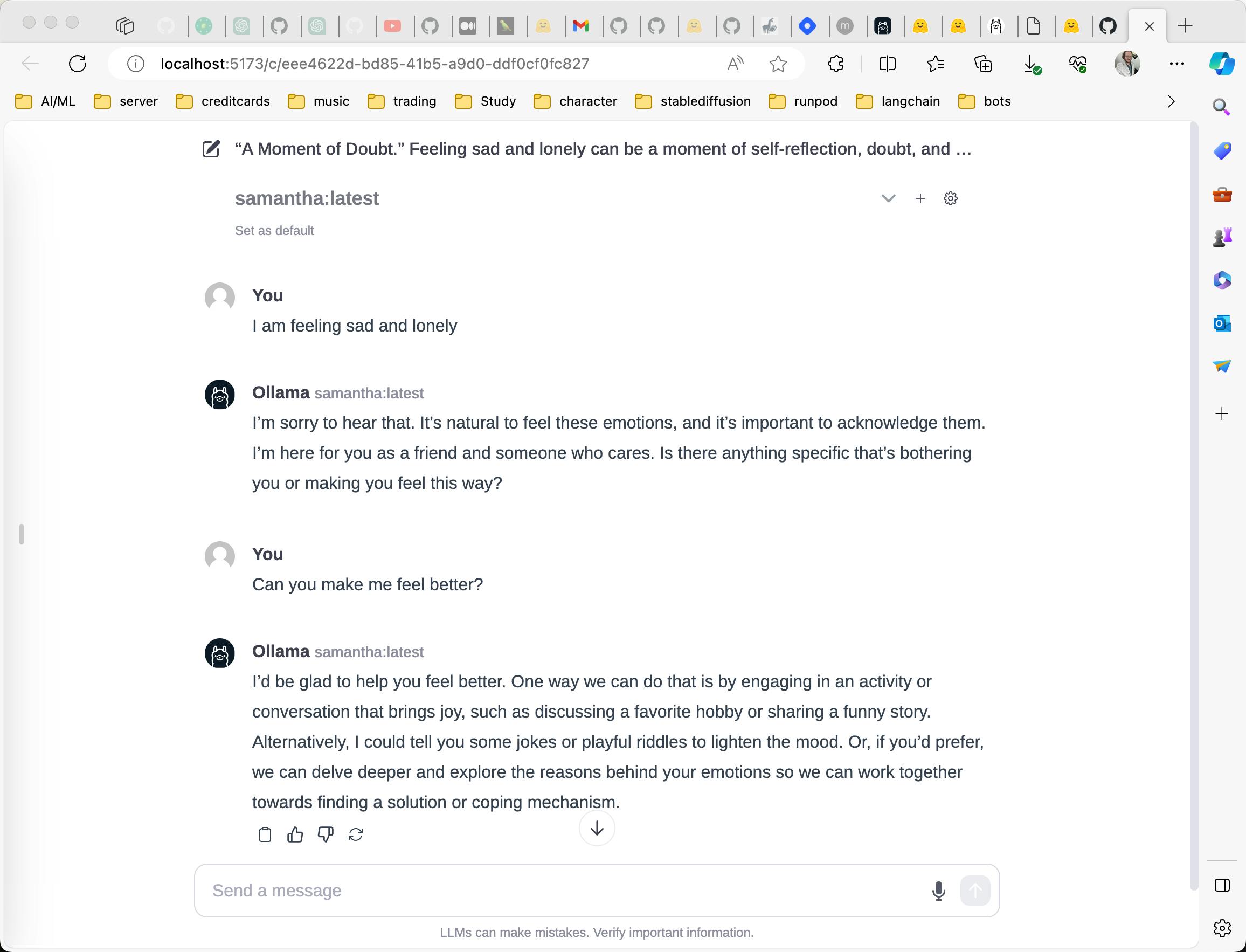

Now you can choose Dolphin or Samantha from the dropdown.

(I have installed a few others too)
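The dropdown lists the models Ollama has installed. `ollama list` shows the same thing on the command line, and the Ollama server exposes it at `GET /api/tags`. Here is a minimal standard-library Python sketch that reads that list; the helper names are illustrative, and it assumes the default port. Names come back with a tag attached, such as `samantha:latest`.

```python
import json
import urllib.request

# Assumed default Ollama endpoint for listing installed models.
TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a parsed /api/tags JSON response."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_models(url: str = TAGS_URL) -> list[str]:
    """Ask a running Ollama server which models it has installed."""
    with urllib.request.urlopen(url) as resp:
        return model_names(json.loads(resp.read()))


# Usage (needs Ollama running):
# print(list_models())
```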

Well, talking to these models from the command line and the web UI is just the beginning.

Frameworks such as LangChain, LlamaIndex, LiteLLM, AutoGen, and MemGPT can all integrate with Ollama. That is where you can really play with these models.

Here is a fun idea that I will leave as an exercise: given some query, ask Dolphin to decide whether it is a question about coding, a request for companionship, or something else. If it is a request for companionship, send it to Samantha. If it is a coding question, send it to deepseek-coder. Otherwise, send it to Dolphin.
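If you want a head start, here is one possible shape for that router, sketched in Python. Everything in it is an assumption: the `ROUTES` table, the classification prompt, and the helper names are hypothetical; `deepseek-coder` would need to be pulled first (`ollama pull deepseek-coder`); and `generate(model, prompt)` stands for any function that calls Ollama, such as one wrapping the `/api/generate` endpoint. Because a model's answer may not be a clean single word, unrecognized labels fall back to Dolphin.

```python
from typing import Callable

# Hypothetical routing table: two models from this guide plus
# deepseek-coder, which must be pulled separately.
ROUTES = {
    "coding": "deepseek-coder",
    "companionship": "samantha",
    "other": "dolphin.mistral",
}

# Hypothetical classification prompt sent to Dolphin.
CLASSIFY_PROMPT = (
    "Classify the following request as exactly one word: "
    "coding, companionship, or other.\n\nRequest: {query}"
)


def pick_model(label: str) -> str:
    """Map Dolphin's label to a model name, defaulting to Dolphin."""
    cleaned = label.strip().lower().strip(".!")
    return ROUTES.get(cleaned, ROUTES["other"])


def route(query: str, generate: Callable[[str, str], str]) -> tuple[str, str]:
    """Ask Dolphin to classify the query, then send it to the chosen model.

    Returns (model_name, reply). `generate(model, prompt)` is injected so
    the router can be exercised without a running Ollama server.
    """
    label = generate("dolphin.mistral", CLASSIFY_PROMPT.format(query=query))
    model = pick_model(label)
    return model, generate(model, query)
```

Passing `generate` in as a parameter keeps the routing logic separate from the HTTP calls, so you can test it with a stub before pointing it at real models.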

And just like that, you have your own MoE (mixture of experts).